Build & Test the Snowflake Kafka Connector Plugin

Recently, a random chain of events pushed me to develop a small degree of expertise with the Snowflake Kafka Connect sink plugin. While this open-source project has some configuration documentation and a README, it was not obvious to me how to initiate a build or get one of the tests up and running.

After a bit of trial and error, I wrote down the step-by-step instructions below in case I need to do it again later. This page demonstrates a Snowflake Kafka Connector end-to-end test running on macOS against a free Snowflake trial instance. Among the end-to-end tests available, I’ve chosen the Apache End2End Test AWS, as it fulfills my goal of testing a particular version of the plugin within a specific Apache Kafka version stack. Here are the prerequisites:

- Snowflake instance

- macOS host

Create private & public keys for Snowflake access

Follow the instructions from Snowflake to create the following key files:

- rsa_key.pub

- rsa_key.p8

Keep your private key passphrase in an environment variable. You’ll need this later to decrypt your p8 key.

export SNOWSQL_PRIVATE_KEY_PASSPHRASE='human*readable*passphrase'
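For reference, the key-generation steps in Snowflake's key-pair authentication guide come down to two openssl calls. The sketch below assumes openssl is on your PATH and that the passphrase lives in SNOWSQL_PRIVATE_KEY_PASSPHRASE as above. The `-passout env:` and `-passin env:` options are my addition to avoid the interactive prompts, so treat this as an illustration and not a copy of the official steps.

```shell
# Assumption: reuse the exported passphrase; fall back to the demo value if unset.
: "${SNOWSQL_PRIVATE_KEY_PASSPHRASE:=human*readable*passphrase}"
export SNOWSQL_PRIVATE_KEY_PASSPHRASE

# Generate a 2048-bit RSA key and wrap it as a passphrase-protected
# PKCS#8 file (rsa_key.p8).
openssl genrsa 2048 2>/dev/null |
  openssl pkcs8 -topk8 -inform PEM -out rsa_key.p8 \
    -passout env:SNOWSQL_PRIVATE_KEY_PASSPHRASE

# Derive the matching public key (rsa_key.pub) from the encrypted private key.
openssl rsa -in rsa_key.p8 -pubout -out rsa_key.pub \
  -passin env:SNOWSQL_PRIVATE_KEY_PASSPHRASE 2>/dev/null

# Show the PEM header of each file as a quick sanity check.
head -n 1 rsa_key.p8 rsa_key.pub
```

Both files land in the current directory, which is where the later steps expect them.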

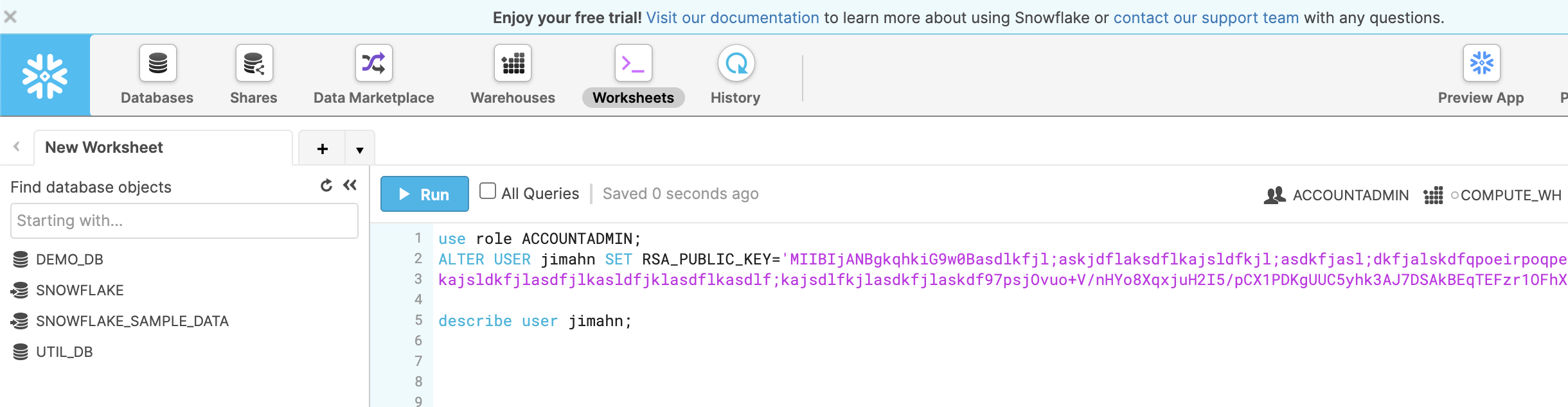

Update Snowflake user with public key

Log in to your Snowflake instance via your web browser and update your user’s RSA_PUBLIC_KEY with the key string from the file rsa_key.pub.

use role ACCOUNTADMIN;

-- this mile-long key string comes from file rsa_key.pub (remove the header/footer lines and all line breaks)

ALTER USER jimahn SET RSA_PUBLIC_KEY='MIIBIjANBg...';
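Stripping the header, footer, and line breaks by hand is error-prone, so here is a small helper that does it. The function name pem_body is my own, and the demo file holds a made-up key body, not a real key.

```shell
# Print the base64 body of a PEM file on a single line:
# drop the "-----BEGIN ...-----" / "-----END ...-----" lines,
# then delete every carriage return and newline.
pem_body() {
  grep -v '^-----' "$1" | tr -d '\r\n'
}

# Demo on a throwaway file with a made-up (not real) key body.
demo=$(mktemp)
printf '%s\n' \
  '-----BEGIN PUBLIC KEY-----' \
  'MIIBIjANBgkq' \
  'hkiG9w0BAQEF' \
  '-----END PUBLIC KEY-----' > "$demo"
pem_body "$demo"; echo
rm -f "$demo"
```

Running `pem_body rsa_key.pub` should give the string to paste between the quotes in the ALTER USER statement.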

Test your keys via snowsql

My ~/.snowsql/config file contains a section like the one below. If you do not have snowsql installed, please see this post.

...

[connections.training]

accountname = ph65577.us-east-2.aws

username = jimahn

warehouse = COMPUTE_WH

rolename = PUBLIC

database = DEMO_DB

schemaname = PUBLIC

...

Invoke snowsql with the connection labeled training and your private key file path set to rsa_key.p8. When prompted, enter human*readable*passphrase. This lets snowsql decrypt your rsa_key.p8 key locally and authenticate you against the public key registered in Snowflake.

$ snowsql -c training --private-key-path rsa_key.p8

Private Key Passphrase:

* SnowSQL * v1.2.10

Type SQL statements or !help

jimahn#COMPUTE_WH@DEMO_DB.PUBLIC>

Snowflake user and secret setup is now complete. Let’s now embed this into our testing rig.

Arm Tests with Snowflake secrets

Make a copy of profile.json.example as profile.json and enter your Snowflake secrets.

git clone https://github.com/snowflakedb/snowflake-kafka-connector.git

cd snowflake-kafka-connector

cp profile.json.example .github/scripts/profile.json

vi .github/scripts/profile.json # enter your secrets into a plain text file

Mine looks like this:

{

"user": "jimahn",

"private_key": "MIIEvgIBADA..3yFZ",

"encrypted_private_key": "MIIE6TAbB..3o",

"private_key_passphrase": "human*readable*passphrase",

"host": "ph65577.us-east-2.aws.snowflakecomputing.com:443",

"schema": "PUBLIC",

"database": "DEMO_DB",

"warehouse": "COMPUTE_WH"

}

encrypted_private_key is simply the single-line version of the super-long string we created in file rsa_key.p8, which is protected by the passphrase.

There’s an odd Java related(?) twist, which requires yet another version of the key, without the passphrase protection, as private_key. This variant of the key can be created via:

openssl pkcs8 -in rsa_key.p8 -outform pem -out rsa_key.pem

In summary, profile.json requires two different variants of the same private key:

- encrypted_private_key from rsa_key.p8 file

- private_key from rsa_key.pem file
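It is easy to mix the two variants up, and the PEM header is a quick way to tell them apart before pasting anything into profile.json. The helper below is a sketch with a name of my own choosing (pem_kind). It relies on the standard PEM markers: an encrypted PKCS#8 file starts with "BEGIN ENCRYPTED PRIVATE KEY", while an unencrypted key starts with "BEGIN PRIVATE KEY" or "BEGIN RSA PRIVATE KEY", depending on your openssl version.

```shell
# Classify a PEM private key file by its header lines.
pem_kind() {
  if grep -q -e '^-----BEGIN ENCRYPTED PRIVATE KEY-----' \
             -e '^Proc-Type: 4,ENCRYPTED' "$1"; then
    # Encrypted PKCS#8, or a traditional key with an encryption header.
    echo encrypted
  elif grep -q -e '^-----BEGIN PRIVATE KEY-----' \
               -e '^-----BEGIN RSA PRIVATE KEY-----' "$1"; then
    echo unencrypted
  else
    echo unknown
  fi
}

# Report on whichever of the two key files exist in the current directory.
for f in rsa_key.p8 rsa_key.pem; do
  if [ -f "$f" ]; then
    echo "$f: $(pem_kind "$f")"
  fi
done
```

With the files from this walkthrough, rsa_key.p8 should report encrypted and rsa_key.pem should report unencrypted.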

Encrypt profile.json

Now that we have file profile.json prepared, we must encrypt it. This is how secrets are stored within the GitHub repo so that they can be shared with the tests housed in GitHub Actions. It is somewhat tedious, but without this step the private keys would end up checked into GitHub unencrypted.

Install gpg

brew install gnupg

export SNOWFLAKE_TEST_PROFILE_SECRET='human*readable*passphrase'

gpg -c .github/scripts/profile.json # generate profile.json.gpg

.github/scripts/decrypt_secret.sh aws # see output in cwd

rm .github/scripts/profile.json # clean up

rm profile.json
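To see the gpg mechanics in isolation, the sketch below runs a symmetric encrypt/decrypt round trip on a stand-in file without any passphrase prompts. It does not reproduce the repo's decrypt_secret.sh. It assumes GnuPG 2.1 or later (for `--pinentry-mode loopback`) and uses a temporary GNUPGHOME so it does not touch your own keyring. The file contents and the `work` directory are purely illustrative.

```shell
# Assumption: reuse the exported secret; fall back to the demo value if unset.
: "${SNOWFLAKE_TEST_PROFILE_SECRET:=human*readable*passphrase}"

# Work in a scratch directory with its own private GnuPG home.
work=$(mktemp -d)
GNUPGHOME="$work/gnupg"; export GNUPGHOME
mkdir -m 700 "$GNUPGHOME"
printf '{"user": "demo"}\n' > "$work/profile.json"   # stand-in, not real secrets

# Encrypt: writes profile.json.gpg next to the input file (same as gpg -c).
gpg --batch --yes --quiet --pinentry-mode loopback \
    --passphrase "$SNOWFLAKE_TEST_PROFILE_SECRET" \
    --symmetric "$work/profile.json"

# Decrypt into a separate file and compare it with the original.
gpg --batch --yes --quiet --pinentry-mode loopback \
    --passphrase "$SNOWFLAKE_TEST_PROFILE_SECRET" \
    --output "$work/roundtrip.json" --decrypt "$work/profile.json.gpg"

if cmp -s "$work/profile.json" "$work/roundtrip.json"; then
  echo "round trip OK"
fi
# Remove "$work" when you are done inspecting it.
```

Passing the passphrase on the command line makes it visible to other processes on the machine, so this form is only suitable for a throwaway demo like this one.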

Test Execution

After all that, we are now finally ready to get the tests launched. Since the tests are configured to run via GitHub Actions, we use a nifty utility, act, to execute them locally.

Install act

$ brew install act

$ act -l # list jobs available

ID Stage Name

build 0 build

build 0 build

build 0 build

build 0 build

build 0 build

build 0 build

$

Unfortunately, all six jobs are named build. Let’s rename the desired job from build to build_apache_aws:

sed -i '' 's/build\:/build_apache_aws\:/g' .github/workflows/End2EndTestApacheAws2.yml

$ act -l

ID Stage Name

build_apache_aws 0 build_apache_aws

build 0 build

build 0 build

build 0 build

build 0 build

build 0 build

$
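The sed call above uses the BSD form of -i that ships with macOS, and its unanchored pattern rewrites every "build:" in the file. Here is a sketch of a variant that should work with both BSD and GNU sed and touches only the job key. It runs against a made-up workflow file, not the real End2EndTestApacheAws2.yml, and it assumes the job key is indented by exactly two spaces.

```shell
# Create a small stand-in workflow file (hypothetical contents).
wf=$(mktemp)
cat > "$wf" <<'EOF'
name: demo
jobs:
  build:
    runs-on: ubuntu-18.04
    steps:
      - name: run build
        run: echo "build: done"
EOF

# -i.bak (suffix attached to the flag) is accepted by both sed flavors.
# The anchored pattern matches only a line that is two spaces + "build:",
# so the "build: done" text inside the run step is left alone.
sed -i.bak -e 's/^  build:[[:space:]]*$/  build_apache_aws:/' "$wf"

# Show every line that still mentions "build", then drop the backup copy.
grep -n 'build' "$wf"
rm -f "$wf.bak"
```

Only the job key changes in the output, while the step name and the echo command keep their original text.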

Now we can run our test as job build_apache_aws!

$ act -v -j build_apache_aws -P ubuntu-18.04=nektos/act-environments-ubuntu:18.04 -s SNOWFLAKE_TEST_PROFILE_SECRET='human*readable*passphrase'

Successful Build & Test

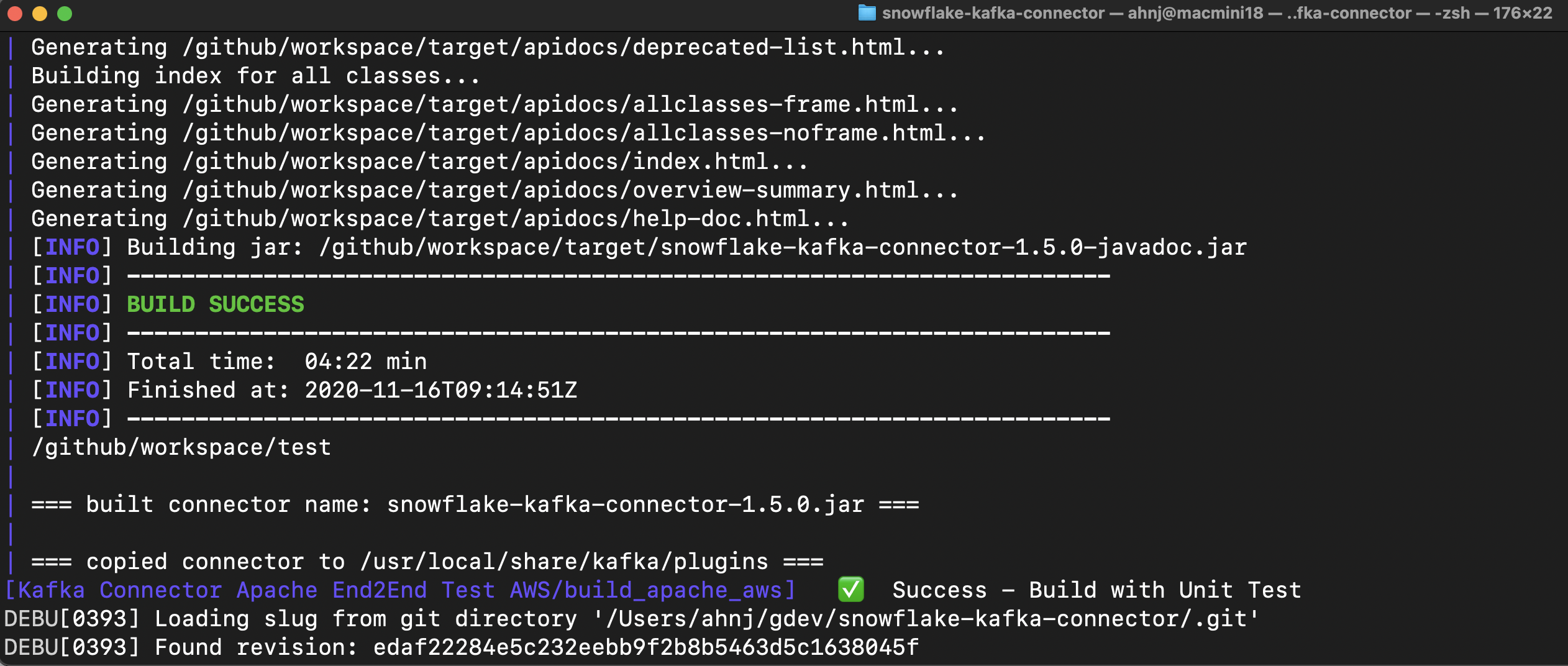

Job build_apache_aws has two primary objectives: first, a build of the plugin jar, snowflake-kafka-connector-1.5.0.jar, and then a test of that jar. A successful build of the jar looks like this:

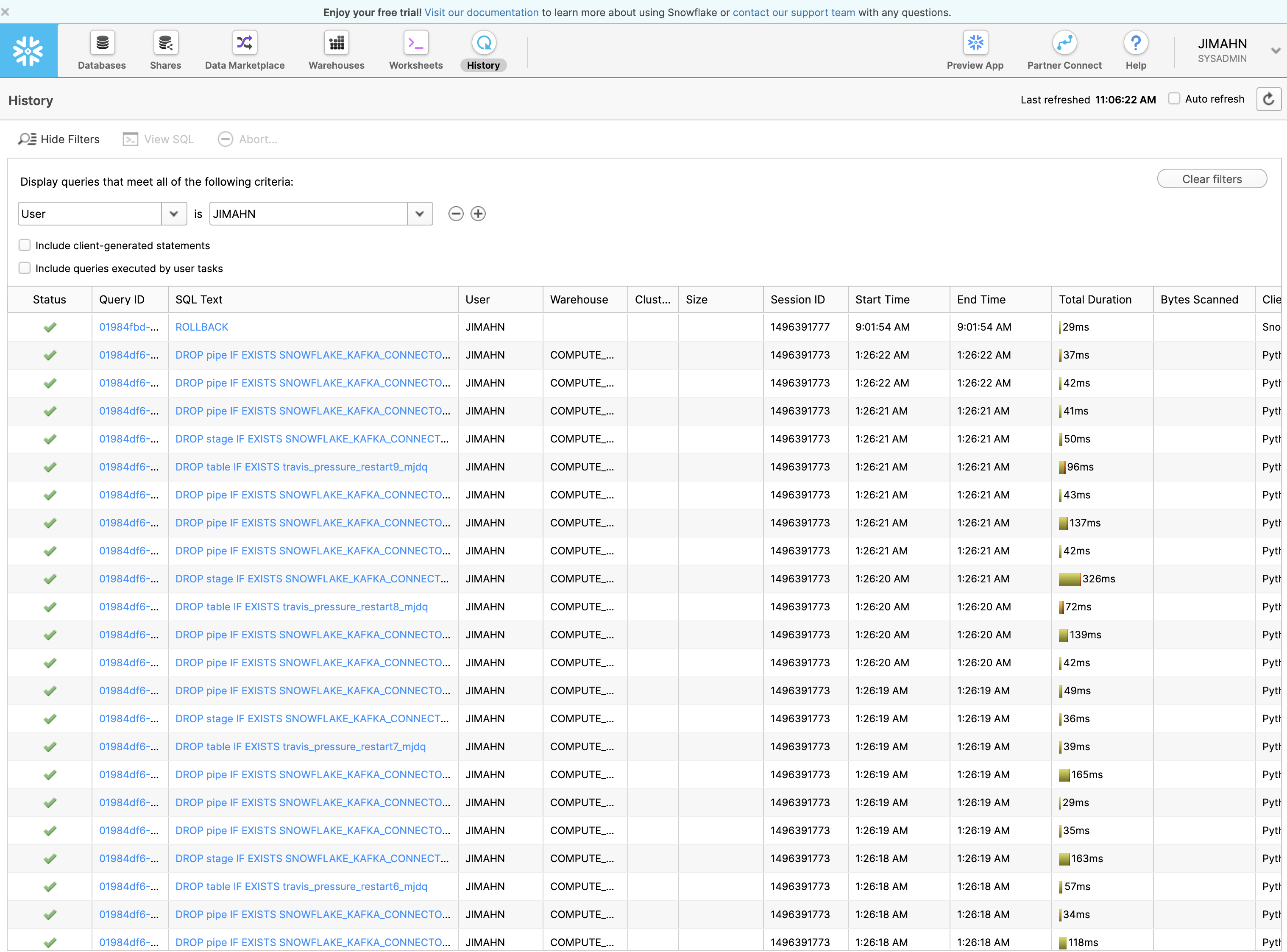

From the Snowflake web interface, you should be able to see all of the Snowflake operations in action while the test is in flight.

The Docker container remains available after the test completes for further inspection.

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7d41fc90f1d3 nektos/act-environments-ubuntu:18.04 "/usr/bin/tail -f /d…" 10 hours ago Up 10 hours act-Kafka-Connector-Apache-End2End-Test-AWS-build-apache-aws

$ docker exec -it 7d41fc90f1d3 /bin/bash

root@docker-desktop:/github/workspace#

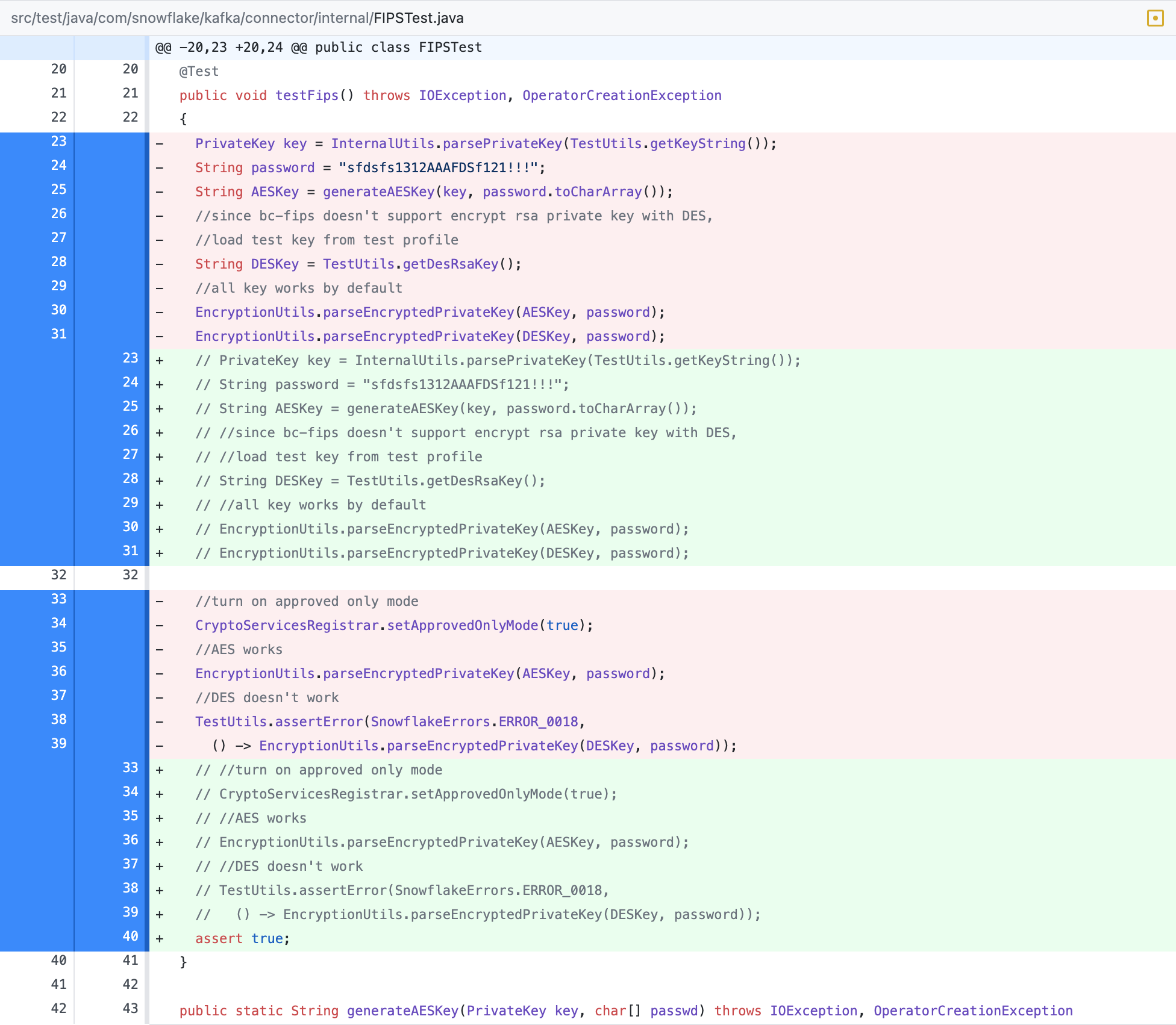

Lastly, to make all the tests pass with the above setup, I had to remove test testFIPS from the test suite. If you know how to remedy this, please share it with me.